OpenAI’s Sora video tool recently encountered controversy following an unexpected leak by testers. A group of testers released access publicly, protesting alleged unfair treatment by OpenAI. This action led to a temporary suspension of Sora, drawing attention to ethical concerns in AI collaborations.

What is Sora AI?

Sora AI, developed by OpenAI, is an advanced video-generation tool powered by artificial intelligence. It allows users to create visually rich and realistic videos from detailed text prompts. The tool aims to serve as an innovative resource for filmmakers, artists, and creative professionals.

How did the leak happen?

A group of artists with early access to OpenAI’s Sora leaked the tool. They shared Sora publicly on Hugging Face, allowing temporary use of the video generator. This act was aimed at expressing frustration with OpenAI’s testing program and its practices.

Sora’s early testers provided unpaid feedback, enhancing the tool for its eventual release. OpenAI responded by halting the program to address the unauthorized access. The incident brought attention to ethical concerns in creative partnerships involving AI technologies.

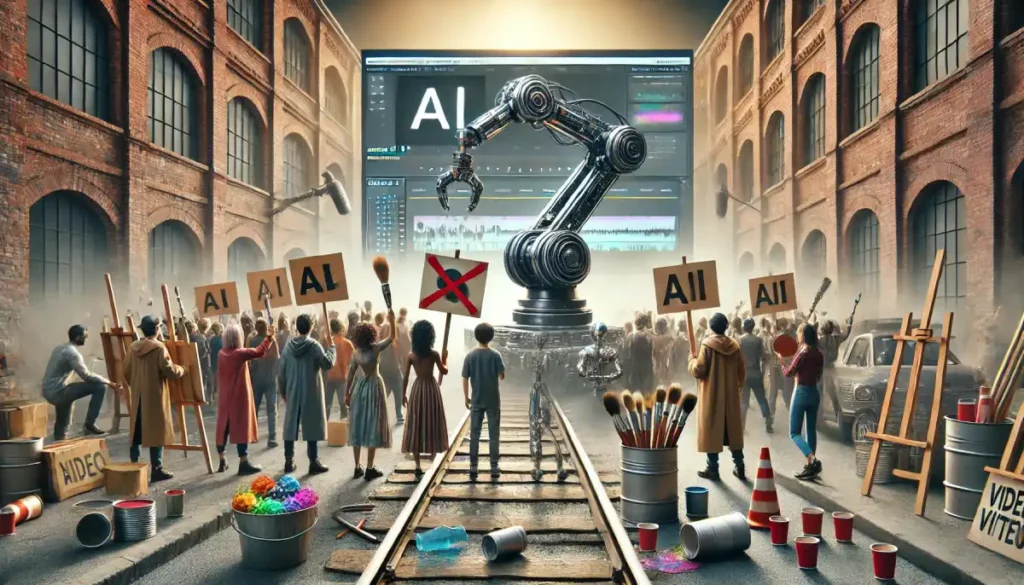

What concerns did the artists raise?

The artists had concerns about OpenAI’s approach to involving them in Sora’s testing. They argued that their contributions were undervalued, as they provided unpaid labor while refining the tool. This sense of exploitation grew from the imbalance between their efforts and OpenAI’s significant financial resources.

Participants emphasized the unfair compensation model, where most testers received no meaningful financial support. OpenAI offered limited incentives, such as film screenings for select participants, which felt insufficient. The artists compared this to the company’s massive marketing benefits derived from their unpaid efforts.

The group accused OpenAI of prioritizing public relations over genuine artist collaboration and support. They felt pressured to publicly endorse the tool’s benefits, sidelining critical feedback. This frustration culminated in a public protest, aiming to shift OpenAI’s focus toward fair treatment and transparency.

How did OpenAI respond?

OpenAI acted swiftly after the leak, pausing access to Sora. The company emphasized that Sora remains in research preview and is still under development. OpenAI acknowledged the contributions of its testers while reiterating its commitment to safety and innovation.

In its statement, OpenAI highlighted the voluntary nature of the alpha testing program. It defended its decision to provide free access, coupled with grants and exclusive opportunities for select participants. The company assured continued support for artists through events and future initiatives to promote creativity.

OpenAI acknowledged the challenges in balancing creativity with fair practices during Sora’s testing phase. It expressed a commitment to refining the program with input from diverse perspectives. By addressing feedback, the company aims to ensure Sora becomes a useful and secure tool for creators.

What implications will the protest have?

The protest highlights the growing tension between artists and AI developers over ethical concerns. Many creative professionals feel undervalued, especially when their contributions are used for commercial gain. This incident underscores the pressing need for fair treatment and compensation in such collaborations.

AI advancements continue to create friction with traditional creative industries, particularly regarding intellectual property and labor practices. Artists often worry that their unique skills are being overshadowed by AI’s mass production capabilities. This clash emphasizes the importance of promoting mutual respect and shared benefits between AI companies and creators.

The incident serves as a wake-up call for AI firms to reconsider their engagement strategies. Ethical missteps can erode trust and alienate talented contributors in the creative sector. Establishing transparent processes and equitable partnerships could help mitigate such disputes in the future.

What does the future of Sora look like?

OpenAI faces a critical moment in determining the future direction of Sora. The company must address artists’ concerns by establishing transparent practices and fair compensation models. Without these changes, building trust with creative communities may remain a significant challenge.

To succeed, OpenAI needs to focus on refining Sora’s capabilities while ensuring ethical implementation. Building stronger partnerships with artists could pave the way for better collaborations. These steps may ultimately transform Sora into a tool that benefits both creators and the industry.

The development of Sora offers opportunities to enhance creative processes and redefine content production. By addressing ethical concerns and improving program inclusivity, OpenAI can set industry standards. The path forward requires balancing technological innovation with meaningful support for artistic contributors.

How does Meta’s approach compare?

Meta’s recent introduction of its Movie Gen tool highlights an alternative approach to AI development. By collaborating with established filmmakers and creators, the program ensures valuable input while maintaining professional boundaries. This strategy contrasts with OpenAI’s approach, where testers voiced concerns about fairness and compensation.

Movie Gen’s limited public availability emphasizes gradual testing and controlled feedback collection. This measured rollout indicates a preference for structured collaborations over widespread access. These contrasting strategies underscore differing priorities in balancing innovation, ethics, and artist partnerships.

What lessons can we learn from the Sora leak incident?

The Sora incident reflects the importance of ethical practices in AI development and testing. Companies must create clear guidelines to ensure transparency and fairness when engaging creative professionals. Such measures can prevent misunderstandings and build trust between organizations and contributors.

Compensating testers appropriately should be a priority for organizations using external contributors in research programs. Offering fair remuneration can encourage meaningful participation and align with community expectations. This helps maintain long-term collaboration and positive perceptions of the technology.

Balancing innovation with ethical practices is essential to promote mutual respect in creative partnerships. Developers should prioritize clear communication and inclusive feedback during the testing phases. By addressing these principles, companies can avoid conflicts and ensure the equitable development of their tools.